Curved screen projection with Isadora


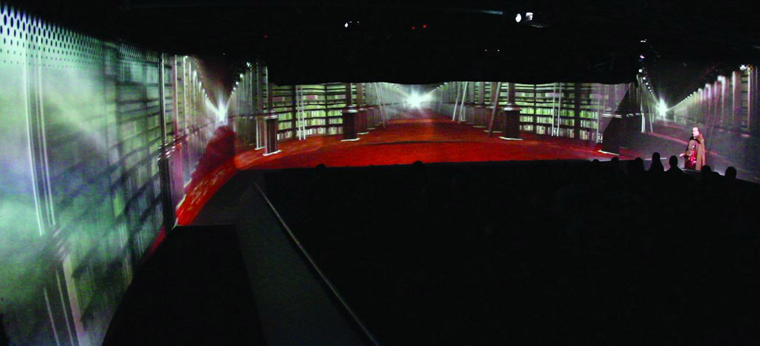

The recent show Spy Garbo involved projection onto a curved screen 140' long by 13' high, using 9 edge-blended projectors to achieve a seamless image. Projecting onto a curved surface distorts the image, so we needed a way to correct for this and make the image appear flat. Jeff Morey and I had already dealt with a similar situation in our work on the American Woman exhibition at The Metropolitan Museum of Art, in which we projected 360 degrees, floor to ceiling, in an oval room.

Spy Garbo scenic design (Neal Wilkinson)

For that project we experimented with many methods of pre-distorting the content to compensate for the curved walls, eventually settling on 3D Studio Max. Even though we were only dealing with a single 4-minute animation, the pre-distortion process for a 6-projector blend was extremely time-consuming, and everything had to be re-rendered every time the content changed.


Spy Garbo, on the other hand, was a full-length play with at least 80 minutes of video content, so it was clear from the start that we had to find a better solution. At the time of The Met project, Isadora (our software of choice for both pieces) had no way of applying curved distortion in real time, but all that changed with v1.3 and the 3D Mesh Projector actor.

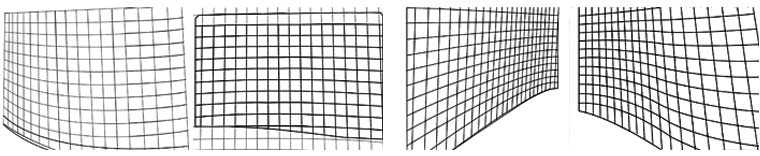

We then traced the portion of the grid points 'visible' to each of the 9 projectors, resulting in 9 grids. We did the tracing in Photoshop in full-screen mode.


2. Tracing the traced grids

Isadora's 3D Mesh Projector actor uses an external data file to describe the distortion. This is essentially a text file containing a series of coordinates, and it is based on the work of Paul Bourke, Associate Professor at the University of Western Australia and general projection geometry guru. My mission became figuring out how to translate the bitmap versions of the grids into X/Y coordinates that the Mesh Projector actor could read. After exploring many different options, I finally hit upon a useful little app called GetData, which is essentially a way of manually digitizing graphs and grids and outputting the results as numbers. A fair amount of manual mouse-labor resulted in a bunch of X/Y data for each of the 9 sections of the screen.

GetData screenshot

3. Deciphering the data file

Here's what a portion of the .data file looks like:

1

When it came to producing a correctly formatted data file, I trawled Paul Bourke's website for some insight into how things worked, and sure enough he explains that these numbers correspond to:

More details on this can be found on Paul Bourke's site.

Columns E-I contain the actual data that is exported: the normalised screen coordinates (the XY data with the above formulae applied) and the UV 'texture' coordinates. To my chagrin, I later figured out that I had missed a vital and obvious detail: the horizontal coordinates should run from -ASPECT to ASPECT (as Mr Bourke had originally stated), not from 0 to 1 as I had mistakenly assumed. The other thing to note is that the values are arranged vertically in groups of 18 (the number of columns in my original grid), with 13 of these groups stacked on top of each other (the number of rows in my grid). You can see the alternating grey/white pattern in the screenshot.
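For anyone who would rather script this step than build a spreadsheet, the normalisation can be sketched in a few lines of Python. This is not the spreadsheet used for the show, and several things here are assumptions rather than facts from the process above: the function names are made up for illustration, the traced points are assumed to be pixel positions measured from the top-left of a 1024x768 image, the vertical range is assumed to be -1 to 1, the fifth value per node is assumed to be an intensity multiplier, and the two header lines (mesh type, then grid dimensions) follow Paul Bourke's mesh format as I understand it. Check all of these against his site and against a known-good file before relying on them.

```python
def normalise_grid(points, width, height):
    """Convert traced pixel positions into mesh nodes.

    points: list of rows, each a list of (px, py) pixel positions measured
            from the top-left corner of a width x height image (assumption).
    Returns (n_cols, n_rows, nodes); each node is (x, y, u, v, intensity).
    """
    aspect = width / height
    n_rows = len(points)
    n_cols = len(points[0])
    nodes = []
    for r, row in enumerate(points):
        for c, (px, py) in enumerate(row):
            # Horizontal position runs from -aspect to +aspect, not 0 to 1.
            x = (2.0 * px / width - 1.0) * aspect
            # Vertical position: +1 at the top, -1 at the bottom (assumed).
            y = 1.0 - 2.0 * py / height
            # Texture coordinates come from the undistorted grid indices.
            u = c / (n_cols - 1)
            v = 1.0 - r / (n_rows - 1)  # assumed to match the y orientation
            nodes.append((x, y, u, v, 1.0))  # 1.0 = full intensity (assumed)
    return n_cols, n_rows, nodes


def format_mesh(n_cols, n_rows, nodes, mesh_type=1):
    """Build the file text: two assumed header lines, then one node per line."""
    lines = [str(mesh_type), f"{n_cols} {n_rows}"]
    lines += [" ".join(f"{value:.6f}" for value in node) for node in nodes]
    return "\n".join(lines) + "\n"


# Example: an undistorted 18 x 13 grid covering a 1024x768 image.
WIDTH, HEIGHT, COLS, ROWS = 1024, 768, 18, 13
flat = [
    [(c * WIDTH / (COLS - 1), r * HEIGHT / (ROWS - 1)) for c in range(COLS)]
    for r in range(ROWS)
]
n_cols, n_rows, nodes = normalise_grid(flat, WIDTH, HEIGHT)
mesh_text = format_mesh(n_cols, n_rows, nodes)
```

The nodes are written one row of 18 at a time, 13 rows in all, which mirrors the grouping described above. Swapping `flat` for the digitized points of one projector's grid would give that projector's distortion data.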

5. Isadora at last!


OK, so here's the moment we've been waiting for! Fire up Isadora and drag a Movie Player and a 3D Mesh Projector on to the stage.

Isadora screenshot


Whether this is a bug or the result of some flaw in my methodology, I don't know, but this is what ended up working for me. I also tweaked the Image Scale, Shift Horizontal and Shift Vertical values once I got into the space.


Lastly, I haven't covered the process by which we divided up the content to fit exactly into each of the 9 projection areas. Briefly put, we created a giant After Effects composition at our full resolution of 8060x768 (derived from the aspect ratio of the actual screen), then nested it inside 9 individual 1024x768 comps. We then nudged and scaled each one to compensate for variations in projector positions. The resulting After Effects composition became our template: to chop our content into perfectly scaled and aligned sections, all we had to do was set up output module presets, drop the content into the master composition and hit render.

After Effects screenshot
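To give a feel for the numbers, here is a rough Python sketch of where each 1024-pixel slice would start inside the 8060-pixel master if the 9 projectors were perfectly evenly spaced with equal overlaps. That even spacing is an assumption made purely for illustration (as is the function name); in the real rig each comp was nudged and scaled by hand, so the actual offsets differed.

```python
def slice_offsets(master_width, slice_width, n_slices):
    """Left edge of each slice, assuming equal spacing across the master."""
    step = (master_width - slice_width) / (n_slices - 1)
    return [i * step for i in range(n_slices)]


MASTER_WIDTH = 8060  # full-width composition, in pixels
SLICE_WIDTH = 1024   # one projector's comp, in pixels
PROJECTORS = 9

offsets = slice_offsets(MASTER_WIDTH, SLICE_WIDTH, PROJECTORS)
# Pixels shared by neighbouring slices, i.e. the nominal edge-blend region.
overlap = SLICE_WIDTH - (offsets[1] - offsets[0])

for index, left in enumerate(offsets, start=1):
    print(f"projector {index}: x = {left:.1f} to {left + SLICE_WIDTH:.1f}")
print(f"nominal overlap: {overlap:.1f} px")
```

Under that assumption the slices step along by 879.5 pixels and neighbours share 144.5 pixels, which gives a starting point for positioning the nested comps before fine-tuning them in the space.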


Here's a shot from the final show:

Spy Garbo library scene